flowchart TD

A[RAG Evaluation] --> B[Retrieval Quality]

A --> C[Generation Quality]

A --> D[End-to-End Evaluation]

B --> E[Precision, Recall, F-score,<br> NDCG, MRR...]

C --> F[Accuracy, Faithfulness,<br> Relevance, ROUGE/BLEU/METEOR,<br> Hallucination...]

D --> G[Helpfulness, Consistency,<br> Conciseness,<br> Latency, Satisfaction...]

Challenges and insights in developing and evaluating RAG assistants

Generative AI and Official Statistics Workshop 2025

Insee

12/05/2025

Initial example

Is this a good answer? Hard to tell.

Both answers are better: precise, contextual

Why RAG?

- Retrieval-Augmented Generation (RAG) combines:

- Information retrieval from a knowledge base

- Text generation using an LLM with contextualized information

Objective:

- Produce accurate information (no hallucination)

- Produce verifiable information (source citation)

- Provide up-to-date answers

- Interpret the meaning of the question, unlike traditional text queries based on bags of words

Why do that?

- Asking Google is great, but the user needs good keywords

- This assumes the user knows what she wants…

- … and has some literacy

- LLMs are increasingly used as search engines

- How can we best structure the information on our website so that responses are relevant?

- We have 20+ years of experience in understanding how Google works

- We also need to understand how LLMs work

- Experimenting with RAG is a good way to do that

Typical RAG pipeline
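
The two stages named above (retrieve, then generate from the retrieved context) can be sketched in a few lines. This is a toy illustration only: `embed` is a bag-of-words stand-in for a real embedding model, the `example.org` URLs and chunk texts are invented, and the final LLM call is left out, so the sketch stops at the prompt that would be sent.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question: str, chunks: list, k: int = 2) -> list:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c["text"])), reverse=True)
    return ranked[:k]

def build_prompt(question: str, sources: list) -> str:
    """Assemble the prompt that would be sent to the generation model."""
    context = "\n".join(
        f"[{i + 1}] {s['text']} (source: {s['url']})" for i, s in enumerate(sources)
    )
    return (
        "Answer using only the numbered sources below and cite them.\n"
        f"{context}\n\nQuestion: {question}"
    )

# Invented knowledge base, for illustration only.
chunks = [
    {"url": "https://example.org/unemployment",
     "text": "the unemployment rate measures the share of the labour force without a job"},
    {"url": "https://example.org/inflation",
     "text": "the consumer price index measures inflation"},
    {"url": "https://example.org/population",
     "text": "the census counts the population"},
]
question = "how is the unemployment rate measured"
sources = retrieve(question, chunks, k=2)
prompt = build_prompt(question, sources)
```

In a real pipeline, `embed` would call the embedding service and the prompt would be sent to the generation model; each of those swaps is one of the choices listed in the next slides.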

Challenge

- Which embedding should I choose?

- Is the best-performing embedding on MTEB relevant for my use case?

- Which backend should I use for embedding? (vLLM, Ollama…)

- Which vector database should I use? (ChromaDB, Qdrant…)

- How do I keep my vector database always available to my RAG in production?

- Should I use only semantic search, or hybrid search?

- How many documents should I retrieve?

- Should I rerank? How?

- …
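
To make the "semantic or hybrid search" question concrete, here is a minimal sketch of reciprocal rank fusion (RRF), one common way to merge a semantic ranking with a keyword ranking. It is not presented as the approach used in this project; the document ids and the constant `k=60` (a conventional default) are assumptions for illustration.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings, k=60, top_n=5):
    """Merge several ranked lists of document ids.

    Each document receives 1 / (k + rank) from every list it appears in;
    documents are then sorted by their summed score (ties broken by id).
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    ordered = sorted(scores, key=lambda d: (-scores[d], d))
    return ordered[:top_n]

# Hypothetical outputs of a semantic retriever and a keyword retriever.
semantic = ["doc_a", "doc_b", "doc_c"]
keyword = ["doc_c", "doc_a", "doc_d"]
fused = reciprocal_rank_fusion([semantic, keyword], top_n=3)
```

RRF uses only ranks, so the two retrievers' scores do not need to be on the same scale, which is why it is often a convenient first thing to try.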

RAG is hard

RAG needs evaluation

- Evaluation challenges:

- Is the retrieved context relevant?

- Is the generation faithful to the context?

- Is the answer useful to end users (e.g. analysts, statisticians)?

- Generic metrics are not that useful

- Better to define use-case-specific objectives

- Adapt pipelines to that end

- Existing plug-and-play frameworks show limitations

- Building a good RAG requires getting into the details

Evaluation

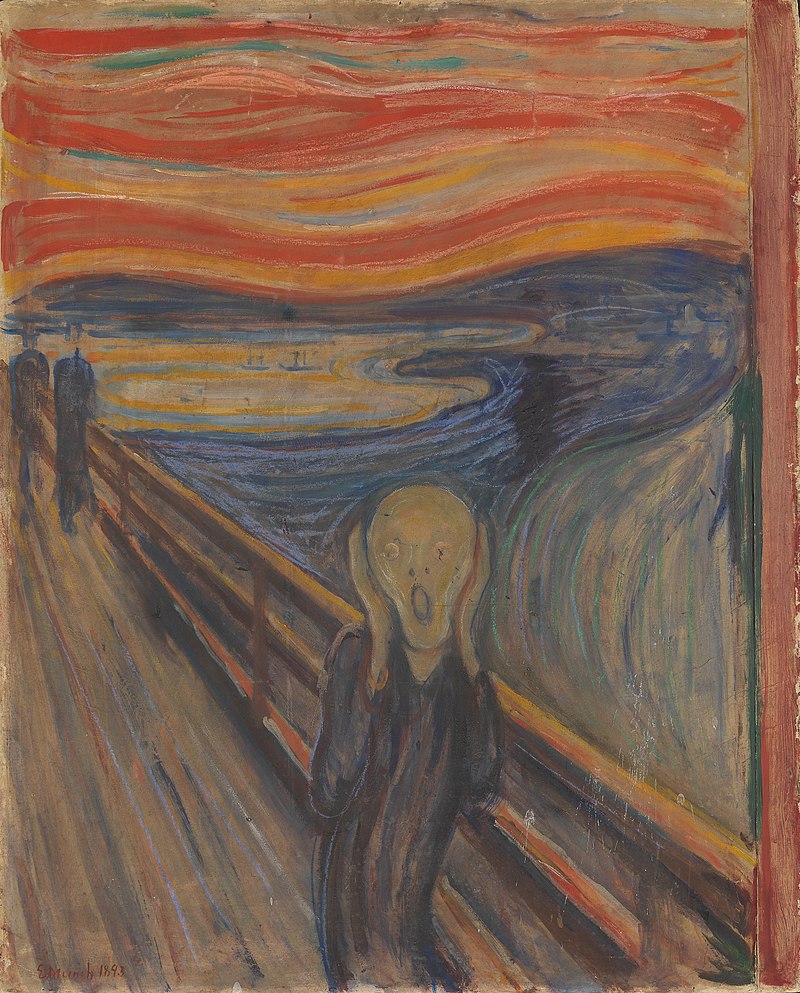

Many metrics exist

An attempt to classify RAG metrics

They are not all that helpful

We need to know what we want

- Best way forward: read Hamel Husain's blog (Husain 2024, 2025)

- Better to start with a limited set of metrics

What do we want?

- No hallucination!

- How many invented references or facts?

- Hallucination rate

- Retrieve relevant content:

- Does the retriever find the relevant page/document for a given question?

- Top-k retrieval

- Have a useful companion to official statistics

- Given the sources, is the answer satisfactory?

- Satisfaction rate
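
Two of the rates above are simple shares and can be sketched as follows. The record layout (`cited`, `retrieved`, boolean votes) is an assumption for illustration, and the hallucination check only covers invented references, not invented facts, which still require manual review.

```python
def hallucination_rate(answers):
    """Share (%) of answers citing at least one reference that is not
    among the sources actually retrieved for that answer."""
    if not answers:
        return 0.0
    flagged = sum(1 for a in answers if set(a["cited"]) - set(a["retrieved"]))
    return 100.0 * flagged / len(answers)

def satisfaction_rate(votes):
    """Share (%) of thumbs-up votes (True) among all votes."""
    if not votes:
        return 0.0
    return 100.0 * sum(1 for v in votes if v) / len(votes)

# Hypothetical evaluation records.
answers = [
    {"cited": ["url1"], "retrieved": ["url1", "url2"]},
    {"cited": ["url9"], "retrieved": ["url1", "url2"]},  # invented reference
    {"cited": [], "retrieved": ["url3"]},
    {"cited": ["url3", "url4"], "retrieved": ["url3", "url4"]},
]
votes = [True, True, False, True]

h_rate = hallucination_rate(answers)
s_rate = satisfaction_rate(votes)
```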

How did we evaluate?

Methodology

- Around 60k pages from insee.fr

- Evaluating RAG on different dimensions

- Main models used:

- Embedding: BAAI/bge-multilingual-gemma2

- Generation: mistralai/Mistral-Small-24B-Instruct-2501

- Collected expert domain Q&A

- Small sample: 62 questions

- More questions will come later

Technical details

- 2 vLLM instances (OpenAI API compatible endpoints) in backend

- Running on Nvidia H100 GPU

- ChromaDB as vector database

- LangChain for document handling

- Streamlit for front end user interface

Note

This is quite a demanding pipeline:

- Embedding and generation instances must be available for every user query
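
To illustrate why both instances are needed on every query, the sketch below builds the two request bodies a single question would trigger, following the OpenAI-style `/v1/embeddings` and `/v1/chat/completions` schemas. The endpoint URLs and the system prompt wording are hypothetical; nothing is actually sent.

```python
import json

# Hypothetical endpoint URLs; the source only states that both services
# expose OpenAI API compatible endpoints.
EMBEDDING_URL = "https://embedding.example.org/v1/embeddings"
GENERATION_URL = "https://generation.example.org/v1/chat/completions"

EMBEDDING_MODEL = "BAAI/bge-multilingual-gemma2"
GENERATION_MODEL = "mistralai/Mistral-Small-24B-Instruct-2501"

def embedding_request(question: str) -> dict:
    """Body for step 1: embed the user question before the vector search."""
    return {"model": EMBEDDING_MODEL, "input": [question]}

def generation_request(question: str, context: str) -> dict:
    """Body for step 2: generate an answer from the retrieved context."""
    return {
        "model": GENERATION_MODEL,
        "messages": [
            # Hypothetical system prompt, for illustration only.
            {"role": "system", "content": "Answer using only this context:\n" + context},
            {"role": "user", "content": question},
        ],
    }

question = "What is the unemployment rate?"
step_1 = json.dumps(embedding_request(question))
step_2 = json.dumps(generation_request(question, "retrieved chunks go here"))
```

If either service is down, the query fails: without step 1 there is no vector to search with, and without step 2 there is no answer.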

1. Collect expert level annotations

To challenge retrieval before any product launch

- Helped us iterate toward a “satisfying” parsing and chunking strategy

- Need medium-sized chunks (around 1,100 tokens)

- No more than 1,500 tokens, to avoid the “lost in the middle” effect

- Set the tables aside

- Hard to chunk, hard to interpret without

- Prioritizing text content.
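
The chunk-size rule above (aim for about 1,100 tokens, never exceed 1,500) can be sketched as a greedy paragraph packer. This is an illustration under assumptions, not the project's actual chunker: tokens are approximated by whitespace-separated words, and a real pipeline would pass the embedding model's tokenizer as `count_tokens`.

```python
def chunk_paragraphs(paragraphs, target_tokens=1100, max_tokens=1500,
                     count_tokens=lambda text: len(text.split())):
    """Greedily pack paragraphs into chunks.

    A chunk is closed when it has reached the target size, or when adding
    the next paragraph would push it over the hard cap. A single paragraph
    longer than max_tokens is kept whole rather than split mid-sentence.
    """
    chunks, current, current_len = [], [], 0
    for para in paragraphs:
        n = count_tokens(para)
        if current and (current_len + n > max_tokens or current_len >= target_tokens):
            chunks.append("\n\n".join(current))
            current, current_len = [], 0
        current.append(para)
        current_len += n
    if current:
        chunks.append("\n\n".join(current))
    return chunks

# Six synthetic paragraphs of 400 "tokens" each.
paragraphs = ["w " * 400 for _ in range(6)]
chunks = chunk_paragraphs(paragraphs)
sizes = [len(c.split()) for c in chunks]
```

Packing whole paragraphs keeps sentences intact, at the cost of chunk sizes that vary around the target.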

Note

This dataset can later be reused to evaluate any parameter change in our RAG pipeline

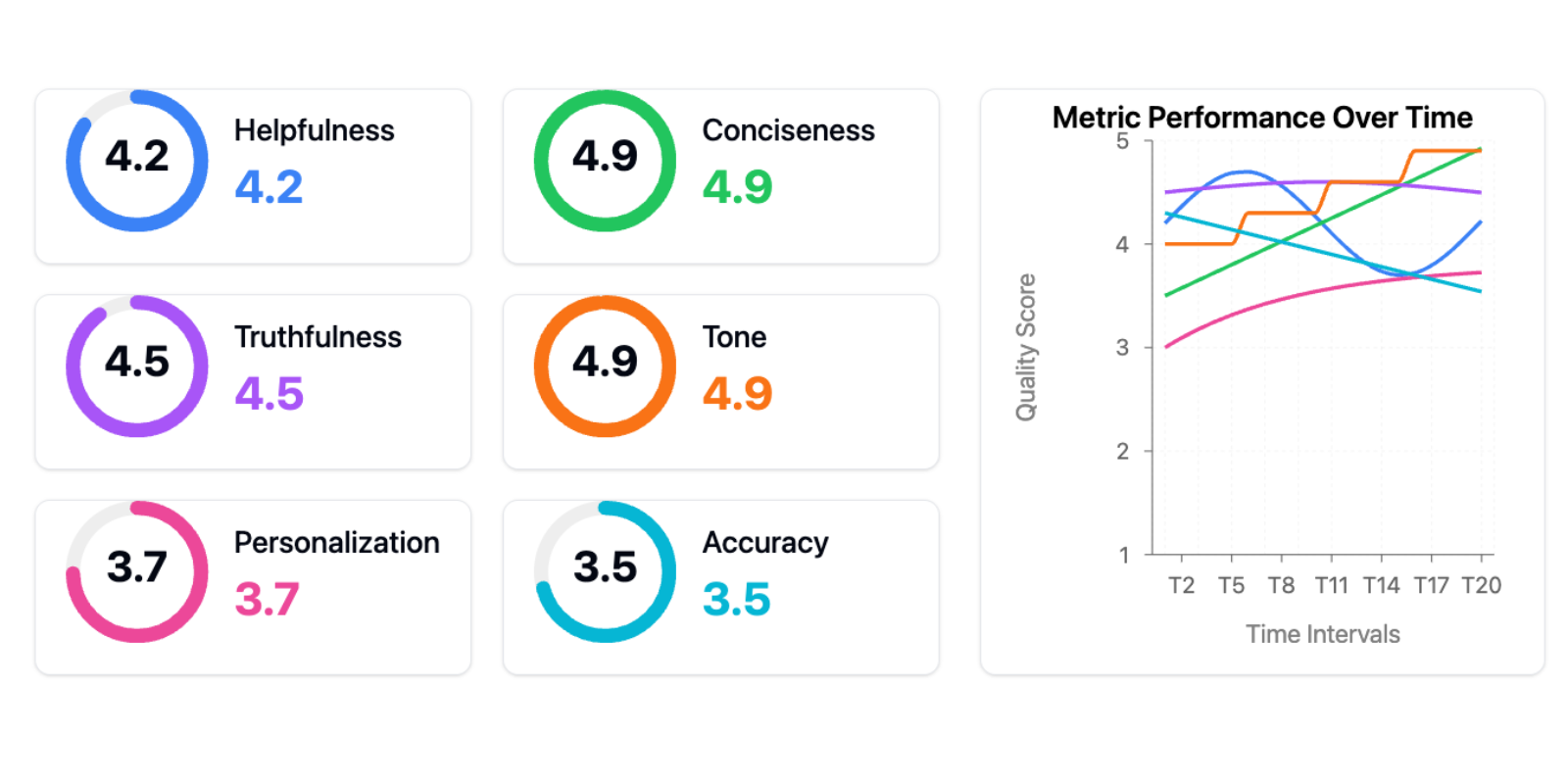

2. Collect user feedback

To ensure we satisfy user needs

- Having an interface gamifies the evaluation process…

- … which can help collect evaluations

- We want users to give honest feedback on different dimensions:

- Sources used, quality of the answer

- Free-form comments, for manual inspection, to understand what does not work

- Simple feedback (👍️/👎️) to track satisfaction rate

Tip

We need good satisfaction rates before A/B testing!

Retriever quality

- Helped monitor retriever quality

- Helped understand problems in website parsing
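
A top-k curve of this kind can be computed from the expert annotations as sketched below. The assumption here is that each question is reduced to the rank of the first correct document among the retrieved ones (or `None` when no correct document is retrieved); the ranks in the example are invented.

```python
def hit_rate_at_k(first_hit_ranks, max_k=10):
    """Return {k: share (%) of questions whose first correct document
    appears within the top k retrieved documents}.

    first_hit_ranks holds, per question, the 1-based rank of the first
    correct document, or None if none was retrieved.
    """
    total = len(first_hit_ranks)
    if total == 0:
        return {}
    curve = {}
    for k in range(1, max_k + 1):
        hits = sum(1 for r in first_hit_ranks if r is not None and r <= k)
        curve[k] = round(100.0 * hits / total, 2)
    return curve

# Hypothetical ranks for five annotated questions.
ranks = [1, 3, None, 2, 1]
curve = hit_rate_at_k(ranks)
```

The curve can only rise or stay flat as k grows, and it plateaus once every retrievable document has been found.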

// Top-k retrieval results (in %) for the two evaluation sets
topk = [
  {top: 1, value: 21.31, version: "Complete set\nof questions"},
  {top: 2, value: 34.43, version: "Complete set\nof questions"},
  {top: 3, value: 37.70, version: "Complete set\nof questions"},
  {top: 4, value: 40.98, version: "Complete set\nof questions"},
  {top: 5, value: 45.90, version: "Complete set\nof questions"},
  {top: 6, value: 47.54, version: "Complete set\nof questions"},
  {top: 7, value: 49.18, version: "Complete set\nof questions"},
  {top: 8, value: 50.82, version: "Complete set\nof questions"},
  {top: 9, value: 50.82, version: "Complete set\nof questions"},
  {top: 10, value: 50.82, version: "Complete set\nof questions"},
  {top: 1, value: 32.35, version: "Restricted to well\nparsed sections"},
  {top: 2, value: 52.94, version: "Restricted to well\nparsed sections"},
  {top: 3, value: 58.82, version: "Restricted to well\nparsed sections"},
  {top: 4, value: 64.71, version: "Restricted to well\nparsed sections"},
  {top: 5, value: 70.59, version: "Restricted to well\nparsed sections"},
  {top: 6, value: 73.53, version: "Restricted to well\nparsed sections"},
  {top: 7, value: 76.47, version: "Restricted to well\nparsed sections"},
  {top: 8, value: 79.41, version: "Restricted to well\nparsed sections"},
  {top: 9, value: 79.41, version: "Restricted to well\nparsed sections"},
  {top: 10, value: 79.41, version: "Restricted to well\nparsed sections"}
]

chart = Plot.plot({
  y: {
    domain: [0, 100],
    grid: true,
    label: "Share of answers that cite a correct document (%)"
  },
  x: {
    label: "Number of retrieved documents",
    type: "linear"
  },
  color: {
    range: ["#4758AB", "#ff562c"]
  },
  marks: [
    Plot.ruleY([0]),
    // One line and one set of dots per evaluation set
    Plot.line(topk, {x: "top", y: "value", stroke: "version"}),
    Plot.dot(topk, {x: "top", y: "value", stroke: "version"}),
    // Label each series at its last point
    Plot.text(topk, Plot.selectLast({
      x: "top",
      y: "value",
      z: "version",
      text: "version",
      textAnchor: "start",
      dx: 4
    }))
  ],
  width: 1100,
  height: 400,
  marginRight: 200,
  style: {
    fontWeight: "bold"
  }
})

RAG behavior

- Helped find a satisfactory prompt

// hallucination_data is not defined in this extract; the marks below
// expect rows with a `method` field and a `value` field (in %)
chart2 = Plot.plot({
  marks: [
    Plot.barY(hallucination_data, {x: "method", y: "value", fill: "#ff562c"}),
    // Print the percentage above each bar
    Plot.text(hallucination_data, {
      x: "method",
      y: "value",
      text: d => d.value.toFixed(1) + "%",
      dy: -8,
      fontWeight: "bold"
    })
  ],
  y: {
    domain: [0, 100],
    label: "Share of answers with at least one invented reference (%)"
  },
  width: 550,
  height: 400,
  style: {
    fontWeight: "bold"
  }
})

First user feedback

- First feedback is mostly positive:

- Streaming is fast

- Main negative feedback:

- Retriever gives outdated papers (how to prioritize recent content?)

RAG demo: rag-insee-demo-unece.lab.sspcloud.fr

Easy to put into production thanks to:

- Our modular approach (Chroma or vLLM APIs)

- Our Kubernetes infrastructure, SSPCloud

Remaining challenges

- Need to prioritize recent content

- Need to prioritize national statistics unless the question is about a specific area
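
One possible way to favor recent content, shown here only as a sketch and not as something the project has implemented, is to blend the retriever's similarity score with an exponential recency decay. The field names, the two-year half-life and the 0.3 weight are all assumptions that would need tuning against the expert Q&A set.

```python
from datetime import date

def recency_weighted(results, today, half_life_days=730.0, weight=0.3):
    """Re-sort retrieved documents by a blend of similarity and recency.

    Recency is 1.0 for a document published today and halves every
    half_life_days; `weight` sets how much recency counts in the blend.
    """
    def score(result):
        age_days = max((today - result["published"]).days, 0)
        recency = 0.5 ** (age_days / half_life_days)
        return (1 - weight) * result["similarity"] + weight * recency
    return sorted(results, key=score, reverse=True)

# Hypothetical retrieval results: the older page is slightly more similar.
results = [
    {"id": "old", "similarity": 0.80, "published": date(2015, 1, 1)},
    {"id": "new", "similarity": 0.78, "published": date(2024, 1, 1)},
]
reranked = recency_weighted(results, today=date(2025, 5, 12))
```

A blend like this trades a little semantic relevance for freshness, so it could hurt questions that explicitly target historical series.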

Conclusion

- RAG quality depends first (and IMO mostly) on how documents are parsed and processed

- Back to an information retrieval problem!

- See Barnett et al. (2024)

- Many RAG resources focus on short documents…

- We struggled for a long time because of poor technical choices

- Other choices (e.g. reranking, generative models…) can be handled once a good pipeline is in place

- llms.txt (llmstxt.org/): proposal to normalize website content for LLM ingestion

- Markdown-based approach

- Some major players have adopted this standard

- After SEO, we will have GEO (Generative Engine Optimization)

- We want easy access to reliable information
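
As an illustration of the Markdown-based approach, the sketch below renders a minimal llms.txt file following the layout described in the proposal: an H1 title, a blockquote summary, then H2 sections listing links. The site name, summary, URLs and notes are invented for the example.

```python
def render_llms_txt(site_name, summary, sections):
    """Render a minimal llms.txt document.

    sections maps a section title to a list of links, each a dict with
    `name`, `url` and `note` keys.
    """
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for title, links in sections.items():
        lines.append(f"## {title}")
        lines.append("")
        for link in links:
            lines.append(f"- [{link['name']}]({link['url']}): {link['note']}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"

# Hypothetical content for an imaginary statistics office.
text = render_llms_txt(
    "Example Statistics Office",
    "Official statistics, methodology notes and publications.",
    {
        "Publications": [
            {"name": "Labour market report",
             "url": "https://example.org/labour.md",
             "note": "quarterly unemployment figures"},
        ],
    },
)
```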

References

Barnett, Scott, Stefanus Kurniawan, Srikanth Thudumu, Zach Brannelly, and Mohamed Abdelrazek. 2024. "Seven Failure Points When Engineering a Retrieval Augmented Generation System." https://arxiv.org/abs/2401.05856.

Husain, Hamel. 2024. "Your AI Product Needs Evals." https://hamel.dev/blog/posts/evals/.

Husain, Hamel. 2025. "A Field Guide to Rapidly Improving AI Products." https://hamel.dev/blog/posts/field-guide/.
